By Ankit Srivastava

Data is the lifeblood of the digital economy. Every decision, every recommendation, and every forecast depends on the quality and accessibility of data. But behind every seamless data-driven process stands a professional who ensures that data is available, reliable, secure, and scalable — the Data Platform Engineer.

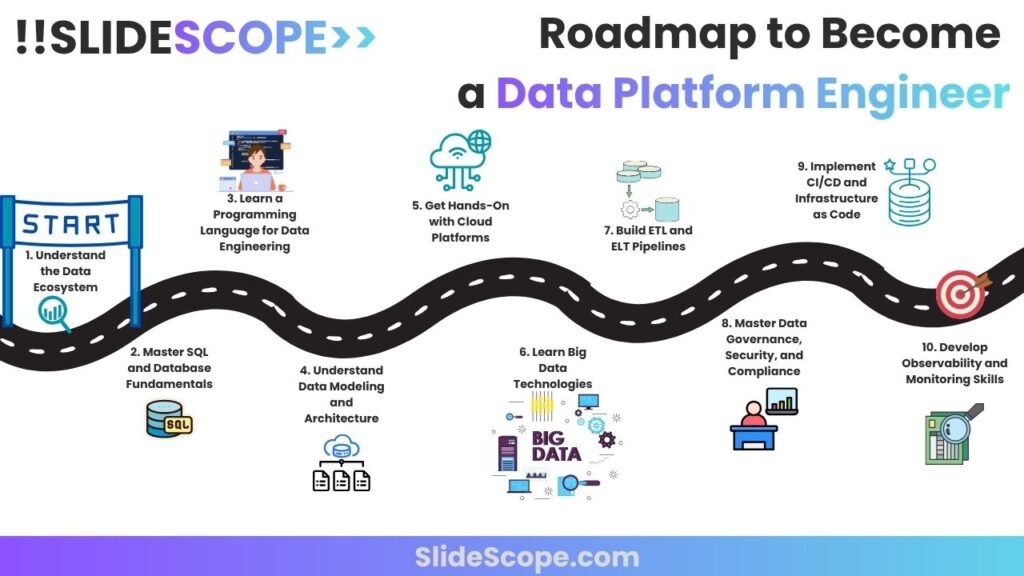

If you’ve ever wondered how to start a career in this domain or how to transition from a data analyst or software developer role, this guide will walk you through the 10 essential steps to becoming a successful Data Platform Engineer.

1. Understand the Data Ecosystem

Before diving into tools and technologies, it’s important to understand the ecosystem you’ll be working in. Data doesn’t just appear — it’s collected, processed, stored, and transformed across multiple systems before it reaches analysts or business users.

Learn how different systems interact — from transactional databases to data lakes, ETL processes, and analytics dashboards. You should be able to visualize data flow from raw ingestion (APIs, sensors, files) to the final analytical reports in Power BI, Tableau, or Looker.

📘 Tip: Study architectures like the modern data stack, data mesh, and data fabric — they’ll give you clarity on how enterprises organize large-scale data operations today.

2. Master SQL and Database Fundamentals

No matter how advanced data engineering becomes, SQL remains its backbone. A Data Platform Engineer must write efficient queries, design schemas, and ensure optimal performance for both transactional (OLTP) and analytical (OLAP) systems.

Start with basics like SELECT, JOIN, and GROUP BY, and gradually move to stored procedures, window functions, and query optimization. Understand how indexing, normalization, and caching work.

Hands-on practice is key. Set up your own PostgreSQL or MySQL instance and experiment with real datasets.

💡 Pro Tip: Explore cloud-native databases such as Amazon Redshift, Google BigQuery, or Azure Synapse — they are integral to modern platforms.

3. Learn a Programming Language for Data Engineering

While SQL handles querying, you’ll need a scripting or programming language to automate and orchestrate data workflows.

Python is the most preferred choice. It integrates seamlessly with APIs, data sources, and cloud environments. Learn libraries like Pandas for data transformation, SQLAlchemy for database connections, and PySpark for big data processing.

Alternatively, Scala is popular for Spark-based architectures, and Java remains relevant in legacy enterprise systems.

🚀 Your Goal: Be able to build and automate small ETL workflows using Python scripts connected to SQL databases.

4. Understand Data Modeling and Architecture

Designing efficient data models is both an art and a science. You must understand how to structure data for analytical speed, scalability, and storage optimization.

Start with the basics of dimensional modeling — facts, dimensions, and hierarchies. Learn how star and snowflake schemas are designed for analytics.

Also, explore data normalization vs denormalization, data marts, and OLAP cubes. This knowledge helps you ensure your platform supports accurate and fast business reporting.

🎯 Example: In a retail dataset, a Sales Fact Table may connect to Customer, Product, and Time Dimension Tables — this structure powers dashboards and KPI analysis efficiently.

5. Get Hands-On with Cloud Platforms

Most organizations have migrated or are migrating to the cloud. As a Data Platform Engineer, you must know how to build and maintain data systems on cloud infrastructure.

Pick one cloud provider and go deep:

- AWS: Learn S3, Glue, Athena, Redshift, and Lambda.

- Azure: Explore Data Lake, Synapse Analytics, and Data Factory.

- Google Cloud: Practice BigQuery, Dataflow, and Dataproc.

Understand data security, scalability, and cost optimization. Get a certification — like AWS Certified Data Engineer or Azure Data Engineer Associate — it adds huge credibility to your profile.

☁️ Pro Tip: Learn how data is stored in object storage, moved via pipelines, and queried in data warehouses.

6. Learn Big Data Technologies

Enterprises often deal with petabytes of data flowing in real-time. To manage this, you need to understand Big Data ecosystems.

Start with Apache Hadoop, which introduced distributed storage and processing. Then move to Apache Spark, the industry standard for large-scale data computation. Learn Apache Kafka for real-time data streaming.

Big data frameworks help process structured and unstructured data efficiently.

💡 Example: A Data Platform Engineer at Netflix might use Kafka to stream real-time user activity data into Spark, which processes it before storing insights in S3 for analytics.

7. Build ETL and ELT Pipelines

Your main responsibility will be ensuring that data flows smoothly across systems. This means building reliable ETL (Extract, Transform, Load) or ELT (Extract, Load, Transform) pipelines.

Learn pipeline tools like:

- Apache Airflow (workflow orchestration)

- dbt (data transformation)

- Azure Data Factory / AWS Glue (data integration)

You’ll handle scheduling, dependency management, and error recovery. Learn about incremental loading, data validation, and monitoring.

🎯 Goal: Be able to automate daily data ingestion from multiple sources (APIs, CSVs, databases) into a warehouse.

8. Master Data Governance, Security, and Compliance

With great data power comes great responsibility. You must ensure that the data platform complies with governance and privacy rules.

Understand data lineage, cataloging, and metadata management. These concepts help you track where data comes from and how it’s used.

Security is equally critical — implement IAM roles, data masking, encryption, and access controls.

📘 Regulatory Frameworks: Familiarize yourself with GDPR, CCPA, and HIPAA — especially if you work with user or healthcare data.

A well-governed data platform ensures trust and transparency across the organization.

9. Implement CI/CD and Infrastructure as Code

Modern data teams align with DevOps practices. Automation ensures scalability and stability.

Learn to build CI/CD pipelines using tools like GitHub Actions, Azure DevOps, or Jenkins to automate code deployment.

Also, explore Infrastructure as Code (IaC) using Terraform, AWS CloudFormation, or Azure Bicep — this allows you to deploy data infrastructure consistently across environments.

🐳 Tip: Containerization tools like Docker and Kubernetes are essential for scalable, portable data services.

10. Develop Observability and Monitoring Skills

The job doesn’t end when a pipeline runs — it continues with ensuring reliability and uptime.

Learn to implement monitoring, alerting, and logging for your data systems. Tools like Prometheus, Grafana, and CloudWatch help track performance and failures.

Additionally, incorporate data quality checks using tools like Great Expectations or Monte Carlo to ensure the accuracy and consistency of ingested data.

A mature Data Platform Engineer sets up systems that self-monitor and self-heal.

Becoming a Data Platform Engineer isn’t about mastering a single tool — it’s about building a holistic understanding of data infrastructure, automation, and governance.

Start small — learn SQL, practice Python scripts, then move toward building pipelines and cloud data architectures. Over time, you’ll develop the expertise to manage complex data ecosystems that power real-world decision-making.

The future of data engineering lies in scalability, automation, and intelligence — and as a Data Platform Engineer, you’ll be at the center of it all.