Becoming a Cloud Data Engineer is one of the most rewarding and future-proof career paths in the data world. As enterprises move their workloads to cloud platforms, demand keeps growing for professionals who can design scalable data pipelines, build modern data architectures, and manage real-time data workflows.

A Cloud Data Engineer combines the foundations of data engineering with modern cloud technologies to ensure data flows securely, efficiently, and reliably across distributed systems. The role is no longer limited to managing traditional databases; it now involves designing data lakes and lakehouses, building event-driven and serverless pipelines, and preparing AI-ready data systems.

Whether you’re coming from software development or a data analyst role, or starting fresh, mastering cloud data engineering opens doors to well-paid opportunities across technology, healthcare, banking, financial services, and insurance (BFSI), retail, consulting, and more. With a clear learning roadmap, hands-on practice, and real-world cloud projects, you can position yourself as a high-value professional who powers data-driven decisions in modern organizations.

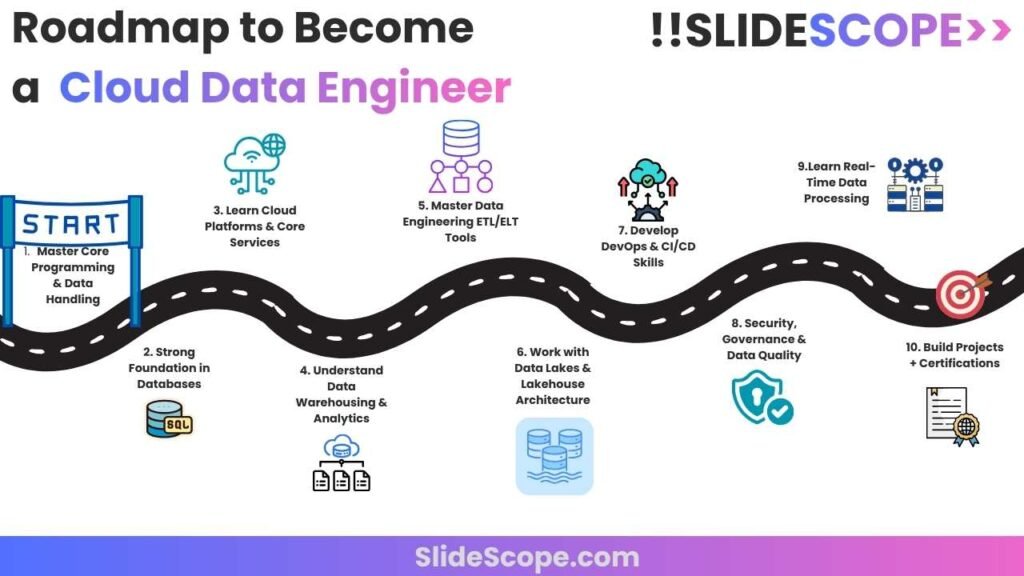

In this guide, we break down a structured 10-step roadmap to help you build skills in cloud platforms, data engineering tools, workflows, and industry best practices, so you can confidently step into a Cloud Data Engineer role. Let’s get started!

Below is the 10-step roadmap to becoming a Cloud Data Engineer:

Roadmap

Step 1: Master Core Programming & Data Handling

- Learn Python (Pandas, NumPy), SQL, and basic scripting (Bash)

- Understand data types, data modeling, and ETL concepts
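To make the ETL concept concrete, here is a minimal extract-transform-load sketch. It assumes a small CSV of orders (the column names and sample rows are hypothetical) and uses only the standard library (`csv`, `sqlite3`) so it runs anywhere; in practice you would likely do the transform step with Pandas and load into a cloud warehouse rather than an in-memory SQLite database.

```python
import csv
import io
import sqlite3

# Hypothetical raw input: messy whitespace, mixed case and one malformed amount.
RAW_CSV = """order_id,customer,amount
1, alice ,19.99
2,BOB,5.00
3,carol,not_a_number
"""


def extract(text: str) -> list[dict]:
    """Extract: parse CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple]:
    """Transform: cast types, normalise names and drop rows that fail to parse."""
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip malformed amounts instead of failing the whole batch
        clean.append((int(row["order_id"]), row["customer"].strip().lower(), amount))
    return clean


def load(rows: list[tuple], conn: sqlite3.Connection) -> None:
    """Load: write the cleaned rows into a target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT customer, amount FROM orders ORDER BY order_id").fetchall())
# → [('alice', 19.99), ('bob', 5.0)]
```

Keeping each stage as its own function makes it easy to test the transform logic in isolation and to swap the load target later.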

Step 2: Build a Strong Foundation in Databases

- Relational: MySQL, PostgreSQL, SQL Server

- NoSQL: MongoDB, Cassandra, DynamoDB

- Get hands-on with schema design, partitioning, and indexing
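Indexing is easiest to understand by asking the database how it plans to run a query. The sketch below uses SQLite (bundled with Python) as a stand-in for MySQL or PostgreSQL; the table, index name and data are hypothetical, and `EXPLAIN QUERY PLAN` is SQLite's counterpart to the `EXPLAIN` command those databases offer. The `partition_for` helper is a simplified, hypothetical illustration of hash partitioning, the general idea behind how systems such as Cassandra and DynamoDB spread rows across nodes by partition key; real systems use their own hash functions and placement schemes.

```python
import hashlib
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
    [(i % 100, i * 1.5) for i in range(1_000)],
)


def plan(sql: str) -> str:
    """Return SQLite's human-readable query plan for a statement."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(row[-1] for row in rows)  # the last column holds the plan detail


query = "SELECT * FROM orders WHERE customer_id = 42"
plan_before = plan(query)
print(plan_before)  # a full table scan (exact wording varies by SQLite version)

conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = plan(query)
print(plan_after)  # now a search that uses idx_orders_customer


def partition_for(key: str, num_partitions: int) -> int:
    """Map a partition key to one of num_partitions buckets with a stable hash."""
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


print(partition_for("customer-42", 8))  # the same key always lands in the same bucket
```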

Step 3: Learn Cloud Platforms & Core Services

- Pick one cloud platform first (AWS/Azure/GCP)

- Key services to learn:

  - AWS → S3, Redshift, RDS, Lambda, Glue, EMR

  - Azure → ADLS, Synapse, Data Factory, Databricks

  - GCP → BigQuery, Dataflow, Cloud Storage, Dataproc
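Many of these services are wired together by events. On AWS, for example, a Lambda function can be triggered whenever a file lands in an S3 bucket. The sketch below shows the shape of such a handler: the `lambda_handler(event, context)` signature and the `Records[].s3.bucket.name` / `Records[].s3.object.key` fields follow the S3 event notification format, while the bucket, key and the choice to simply return the object paths are hypothetical. A real handler would typically go on to call S3 or start a Glue job via `boto3`; that part is omitted so the sketch runs locally without AWS credentials.

```python
import json
from urllib.parse import unquote_plus


def lambda_handler(event, context):
    """Collect the S3 objects referenced in an S3 event notification."""
    objects = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded (spaces become '+'), so decode them first.
        key = unquote_plus(record["s3"]["object"]["key"])
        objects.append(f"s3://{bucket}/{key}")
    # A real pipeline might start a Glue job or copy the file at this point.
    return {"statusCode": 200, "body": json.dumps({"objects": objects})}


# Local test with a hand-written, trimmed-down event (hypothetical bucket and key).
sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "my-raw-bucket"}, "object": {"key": "sales/2024/jan+report.csv"}}}
    ]
}
print(lambda_handler(sample_event, None))
```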

Step 4: Understand Data Warehousing & Analytics

- Learn star and snowflake schemas

- Understand columnar storage and massively parallel processing (MPP) systems

- Tools: Snowflake, BigQuery, Redshift, Synapse
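A star schema places a central fact table (measurable events such as sales) among dimension tables (descriptive context such as products and dates). The sketch below builds a tiny, hypothetical star schema in SQLite and runs the kind of join-and-aggregate query that warehouses like Snowflake, BigQuery, Redshift and Synapse are built to run at scale; all table and column names are illustrative. In a snowflake schema, the dimensions would be normalized further, for example by moving `category` into its own table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Dimension tables: descriptive attributes.
    CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, name TEXT, category TEXT);
    CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, year INTEGER, month INTEGER);

    -- Fact table: one row per sale, with foreign keys into each dimension.
    CREATE TABLE fact_sales (
        product_key INTEGER REFERENCES dim_product (product_key),
        date_key INTEGER REFERENCES dim_date (date_key),
        quantity INTEGER,
        revenue REAL
    );

    INSERT INTO dim_product VALUES (1, 'Laptop', 'Electronics'), (2, 'Desk', 'Furniture');
    INSERT INTO dim_date VALUES (20240115, 2024, 1), (20240210, 2024, 2);
    INSERT INTO fact_sales VALUES
        (1, 20240115, 2, 2000.0),
        (2, 20240115, 1, 300.0),
        (1, 20240210, 1, 1000.0);
    """
)

# Typical analytical query: join facts to dimensions, then aggregate.
rows = conn.execute(
    """
    SELECT p.category, d.month, SUM(f.revenue) AS total_revenue
    FROM fact_sales AS f
    JOIN dim_product AS p ON f.product_key = p.product_key
    JOIN dim_date AS d ON f.date_key = d.date_key
    GROUP BY p.category, d.month
    ORDER BY p.category, d.month
    """
).fetchall()
print(rows)
# → [('Electronics', 1, 2000.0), ('Electronics', 2, 1000.0), ('Furniture', 1, 300.0)]
```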

Step 5: Master ETL/ELT Tools

- Apache Airflow, AWS Glue, Azure Data Factory

- dbt for transformations

- Spark for distributed processing
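Orchestrators such as Apache Airflow model a pipeline as a directed acyclic graph (DAG) of tasks and run each task only after its upstream dependencies have finished. The sketch below is not Airflow code: it uses Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+) to show the same dependency-ordering idea, with hypothetical task names standing in for real extract, Spark and dbt steps.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks it depends on (hypothetical pipeline).
pipeline = {
    "extract_orders": set(),
    "extract_customers": set(),
    "spark_clean_orders": {"extract_orders"},
    "dbt_build_models": {"spark_clean_orders", "extract_customers"},
    "refresh_dashboard": {"dbt_build_models"},
}


def run_pipeline(dag: dict[str, set[str]]) -> list[str]:
    """Run tasks in dependency order and return the order in which they ran."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # raises graphlib.CycleError if the graph contains a cycle
    executed = []
    while sorter.is_active():
        # Every task returned here has all its dependencies done, so an
        # orchestrator could run this whole batch in parallel.
        for task in sorter.get_ready():
            print(f"running {task}")
            executed.append(task)
            sorter.done(task)
    return executed


order = run_pipeline(pipeline)
```

The batches returned by `get_ready()` correspond to tasks that could run concurrently, which is roughly how an orchestrator decides what to schedule next.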

Step 6: Work with Data Lakes & Lakehouse Architecture

- Learn data lake concepts, the Parquet file format, and Delta Lake

- Tools: Databricks, Apache Iceberg, S3/ADLS
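Data lakes on S3 or ADLS are usually organized into partitioned folders so that query engines can skip files that cannot match a filter (partition pruning). The sketch below builds Hive-style `key=value` paths, a layout commonly used for Parquet data read by Spark and similar engines, and then prunes them by date. The bucket, dataset and file names are hypothetical; table formats such as Delta Lake and Apache Iceberg add transaction logs and metadata on top of data files like these.

```python
from datetime import date, timedelta


def partition_path(base: str, dataset: str, day: date, part: int) -> str:
    """Build a Hive-style partitioned object path for one Parquet file."""
    return (
        f"{base}/{dataset}/year={day.year}/month={day.month:02d}/day={day.day:02d}/"
        f"part-{part:05d}.parquet"
    )


def parse_partitions(path: str) -> dict[str, str]:
    """Extract the key=value partition columns from a path."""
    return dict(seg.split("=", 1) for seg in path.split("/") if "=" in seg)


def prune(paths: list[str], start: date, end: date) -> list[str]:
    """Keep only files whose partition date falls within [start, end]."""
    kept = []
    for p in paths:
        parts = parse_partitions(p)
        d = date(int(parts["year"]), int(parts["month"]), int(parts["day"]))
        if start <= d <= end:
            kept.append(p)
    return kept


base = "s3://my-data-lake"  # hypothetical bucket
files = [partition_path(base, "sales", date(2024, 1, 1) + timedelta(days=i), 0) for i in range(10)]
selected = prune(files, date(2024, 1, 3), date(2024, 1, 5))
print(selected[0])  # → s3://my-data-lake/sales/year=2024/month=01/day=03/part-00000.parquet
print(len(selected))  # → 3
```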

Step 7: Develop DevOps & CI/CD Skills

- Git & GitHub

- CI/CD pipelines: GitHub Actions, Azure DevOps, AWS CodePipeline

- Container basics: Docker, Kubernetes (optional but recommended)
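A CI/CD pipeline is only as useful as the checks it runs. A common pattern is to keep transformation logic in plain functions and cover it with unit tests that GitHub Actions, Azure DevOps or CodePipeline execute on every push, for example by running `pytest`. The function and tests below are a hypothetical, minimal sketch of that pattern; the tests follow pytest's `test_*` naming convention but use only plain `assert`, so they can also be called directly.

```python
def normalize_country(code: str) -> str:
    """Hypothetical transformation: trim, upper-case and fix a known non-ISO alias."""
    aliases = {"UK": "GB"}  # ISO 3166-1 uses GB for the United Kingdom
    cleaned = code.strip().upper()
    return aliases.get(cleaned, cleaned)


def test_strips_and_uppercases():
    assert normalize_country("  us ") == "US"


def test_maps_known_alias():
    assert normalize_country("uk") == "GB"


if __name__ == "__main__":
    # pytest would discover the test_* functions automatically; this runs them by hand.
    test_strips_and_uppercases()
    test_maps_known_alias()
    print("all tests passed")
```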

Step 8: Learn Security, Governance & Data Quality

- Identity & Access Management (IAM)

- Data encryption, VPCs, and firewall rules

- Data quality tools: Great Expectations, Monte Carlo (optional)
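Data quality tools like Great Expectations let you declare expectations about a dataset (no nulls, unique keys, values within a range) and fail the pipeline when they are violated. The sketch below imitates that idea with a few hypothetical check functions in plain Python; it is not the Great Expectations API, just a way to see what such checks do under the hood.

```python
from typing import Any, Callable

Row = dict[str, Any]
Check = Callable[[list[Row]], bool]


def not_null(column: str) -> Check:
    return lambda rows: all(r.get(column) is not None for r in rows)


def unique(column: str) -> Check:
    return lambda rows: len({r[column] for r in rows}) == len(rows)


def in_range(column: str, low: float, high: float) -> Check:
    # Nulls are ignored here; the not_null check is responsible for them.
    return lambda rows: all(low <= r[column] <= high for r in rows if r[column] is not None)


def validate(rows: list[Row], checks: dict[str, Check]) -> dict[str, bool]:
    """Run every named check and report which ones passed."""
    return {name: check(rows) for name, check in checks.items()}


orders = [
    {"order_id": 1, "amount": 25.0},
    {"order_id": 2, "amount": None},
    {"order_id": 2, "amount": 500.0},
]
results = validate(
    orders,
    {
        "amount_not_null": not_null("amount"),
        "order_id_unique": unique("order_id"),
        "amount_between_0_and_1000": in_range("amount", 0, 1000),
    },
)
print(results)
failed = [name for name, ok in results.items() if not ok]
if failed:
    print(f"Data quality checks failed: {failed}")  # a real pipeline would stop here
```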

Step 9: Learn Real-Time Data Processing

- Streaming platforms: Apache Kafka, Amazon Kinesis, Google Cloud Pub/Sub

- Stream processing: Spark Structured Streaming, Apache Flink, Databricks
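Stream processors such as Flink and Spark Structured Streaming commonly aggregate events over time windows. The sketch below computes a tumbling-window count (fixed-size, non-overlapping windows) over a small, hypothetical list of click events. A real job would read the events from Kafka, Kinesis or Pub/Sub and would also need to handle late and out-of-order data, which this sketch deliberately ignores.

```python
from collections import defaultdict

# Hypothetical events: (seconds since stream start, page), as they might arrive from a topic.
events = [
    (0, "home"),
    (30, "cart"),
    (59, "home"),
    (61, "home"),
    (125, "checkout"),
]


def tumbling_window_counts(stream, window_seconds: int) -> dict[tuple[int, str], int]:
    """Count events per (window_start, key) using fixed, non-overlapping windows."""
    counts: dict[tuple[int, str], int] = defaultdict(int)
    for ts, key in stream:
        window_start = ts - (ts % window_seconds)  # align each event to the start of its window
        counts[(window_start, key)] += 1
    return dict(counts)


for (start, page), n in sorted(tumbling_window_counts(events, 60).items()):
    print(start, page, n)
```

With 60-second windows, the first three events fall into the window starting at 0, the event at 61 into the window starting at 60, and the event at 125 into the window starting at 120.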

Step 10: Build Projects & Earn Certifications

- Sample projects:

  - ✅ Cloud-based ETL pipeline

  - ✅ Real-time analytics dashboard

  - ✅ Data lake + warehouse + BI stack

- Certifications:

  - AWS Certified Data Engineer - Associate

  - Microsoft Certified: Azure Data Engineer Associate

  - Google Cloud Professional Data Engineer

🎯 Bonus Tips

- Start small → Build end-to-end projects

- Showcase skills on GitHub & LinkedIn

- Practice SQL + Python daily

- Keep up with the latest cloud service updates