By Ankit Srivastava

In the evolving world of SEO, the term “passive indexing” is beginning to surface with increasing significance. But what exactly does it mean? And how does it differ from legacy (or traditional) indexing methods and the more modern “active” or “proactive” indexing strategies? In this article I’ll walk you through the concept of passive indexing, how it fits into the broader indexing journey, how search engines have changed their approach, and what you as an SEO practitioner should do in 2025 and beyond.

1. Indexing 101: Setting the Stage

Before we dive into passive indexing, let’s quickly recap what indexing means in the SEO world.

Search engines like Google crawl the web (the crawling phase), discover new URLs, parse their content, evaluate them, and then store relevant information in their internal database (the index).

Only when a URL is indexed can it even appear in search engine results pages (SERPs). If your page is not indexed — no matter how great the content — it may as well be invisible for organic search purposes.

Here’s a simplified flow:

Crawling → Rendering & Parsing → Indexing → Ranking & Serving

2. What is Passive Indexing?

Passive indexing refers to a scenario where you, as a website owner or SEO practitioner, largely rely on the search engine to discover, crawl, and index your pages — without taking proactive steps to signal or manage indexing.

In other words: you publish content (or maintain pages), host them on your site, maybe submit a sitemap, but you don’t actively push or manage how and when those pages get indexed. You trust the search engine bots to do the job passively.

In older SEO models, this was the default. Many resources describe passive indexing as simply “doing nothing” after publishing and waiting for Googlebot to find you. (mangools)

Key characteristics of passive indexing:

- No special URL submission or indexing API calls.

- Let search engine crawlers discover via internal/external links.

- Minimal management of indexing timing or prioritisation.

- Delay in indexing is accepted as “normal”.

3. Why Passive Indexing Was Once Acceptable

In the earlier era of SEO (say, the 2010s), the web ecosystem was smaller, crawl budgets were generous, and competition for indexing was limited. Many websites could perform well by simply publishing well-optimized pages and letting search engines do the rest.

Benefits of this approach included:

- Low technical overhead (you didn’t need to drill deep into indexing workflows).

- Simplicity — focus on content quality rather than indexing mechanics.

- Works reasonably well for smaller sites with few changes or updates.

However, as search volume, content creation, and competition increased, the limitations of passive indexing became evident.

4. The Shift: Active & Proactive Indexing

Modern SEO increasingly emphasizes active or proactive indexing — where you actively signal search engines, optimise crawl/index budgets, monitor index status, and treat indexing as a strategic rather than passive process. For example:

- Submitting updated XML sitemaps, or using indexing protocols like IndexNow. (Wikipedia)

- Using the URL Inspection tool in Google Search Console to request indexing. (mangools)

- Monitoring log files, crawl budget consumption, and index coverage error reports. (Oncrawl – Technical SEO Data)

This shift is largely driven by the need for speed (fresh content ranking sooner), scale (large sites with thousands of pages) and precision (ensuring important pages are indexed quickly and accurately).
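To make the “actively signal” idea concrete, here is a minimal sketch of an IndexNow submission. It assumes the shared endpoint and the JSON fields (`host`, `key`, `urlList`, optional `keyLocation`) described in the public IndexNow documentation. The key value and the example URLs are hypothetical placeholders, and the function only builds the request rather than sending it.

```python
import json
from urllib.parse import urlparse
from urllib.request import Request

# Shared endpoint named in the IndexNow documentation (assumption: unchanged).
INDEXNOW_ENDPOINT = "https://api.indexnow.org/indexnow"


def build_indexnow_request(urls, key, key_location=None):
    """Build (but do not send) an IndexNow POST request for a batch of URLs."""
    hosts = {urlparse(u).netloc for u in urls}
    if len(hosts) != 1:
        # The payload carries a single "host" field, so keep one host per batch.
        raise ValueError("all URLs in one submission must share a host")
    payload = {"host": hosts.pop(), "key": key, "urlList": list(urls)}
    if key_location:
        payload["keyLocation"] = key_location
    return Request(
        INDEXNOW_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )


request = build_indexnow_request(
    [
        "https://www.example.com/new-product",
        "https://www.example.com/updated-guide",
    ],
    key="hypothetical-key-123",  # placeholder; a real key must be hosted on your site
)
# To actually submit: urllib.request.urlopen(request)
```

Building the request separately from sending it keeps the sketch safe to run and makes the payload easy to inspect or log before anything leaves your server.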

5. Passive vs Active (vs Proactive) — Side-by-Side

| Approach | Description | Typical Tactics | Use Cases |
|---|---|---|---|
| Passive Indexing | Minimal control; rely on bots to discover & index. | Publish content, sitemap submission, internal links. | Small sites, low update frequency |
| Active Indexing | Some control over index signals and workflow. | URL inspection, sitemap updates after publishing. | Medium sites, moderate update volume |
| Proactive Indexing | Full control: ongoing monitoring & automation. | IndexNow, crawl-budget management, index status tools. | Large enterprise sites, frequent new pages |

As explained by the team at OnCrawl:

“Proactive indexing turns visibility from a passive outcome into a deliberate strategy.” (Oncrawl – Technical SEO Data)

That hints at how passive indexing is no longer enough in many competitive scenarios.

6. What Passive Indexing Doesn’t Handle Well

If you rely solely on passive indexing, you may face the following issues:

- Delay in indexation: It may take days or weeks for new pages to be discovered and indexed. (mangools)

- Inconsistent coverage: Especially for large sites, some important pages may never be indexed if crawl budget is misused. (Onely)

- Poor prioritisation: Search engines might index less valuable pages while missing high-value pages.

- Less control over freshness: New content may not rank quickly if indexing is slow.

- Limited visibility into indexing status: Without active monitoring you may not notice gaps.

In highly competitive niches, any delay or gap becomes a missed opportunity for traffic or ranking advantage.
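One way to spot the coverage and prioritisation gaps listed above is to compare your priority pages against what Googlebot actually requested. The sketch below assumes access logs in the common “combined” format and identifies Googlebot by user-agent string only. That string can be spoofed, so treat the result as a rough signal. The sample log lines and paths are made up for illustration.

```python
import re
from collections import Counter

# Matches the request, status, size, referrer and user-agent parts of a
# combined-format access log line (assumed format).
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(log_lines):
    """Count requests per path whose user agent mentions Googlebot."""
    hits = Counter()
    for line in log_lines:
        match = LOG_LINE.search(line)
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("path")] += 1
    return hits


def never_crawled(priority_paths, hits):
    """Return the priority paths that received no Googlebot request."""
    return [path for path in priority_paths if hits[path] == 0]


sample_log = [
    '66.249.66.1 - - [10/Jan/2025:10:00:00 +0000] "GET /pricing HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Jan/2025:10:00:05 +0000] "GET /blog/new-post HTTP/1.1" 200 7340 "-" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    '66.249.66.1 - - [10/Jan/2025:10:01:00 +0000] "GET /tag/misc HTTP/1.1" 200 900 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
]

hits = googlebot_hits(sample_log)
print(never_crawled(["/pricing", "/blog/new-post"], hits))  # ['/blog/new-post']
```

In this sample the bot spent a request on a low-value tag page while the new blog post was never fetched, which is exactly the prioritisation problem described above.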

7. How to Implement Passive-Friendly but Smart Indexing Strategies

Even if you adopt a “passive indexing” mindset, you can still optimise it and reduce its drawbacks. Use these best practices:

- Ensure crawlability: Make sure your important pages are reachable via internal links, and your robots.txt / noindex tags don’t block them.

- Submit a clear sitemap: While passive, you still give search engines a roadmap of your site. (mangools)

- Maintain high technical health: No slow pages, broken links, or duplicate content — because these hinder indexing. (Onely)

- Monitor index coverage regularly: Use Search Console or third-party tools to detect unindexed pages.

- Link-building and external signals: Passive discovery still happens via backlinks from other sites — which helps new pages get crawled.

While this doesn’t fully substitute for active indexing, it makes the passive approach more reliable.
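The crawlability check in the list above is easy to automate. The following sketch uses Python’s standard-library `urllib.robotparser` to test a handful of important URLs against a robots.txt body. The rules and URLs are invented examples. The standard-library parser does plain prefix matching, so it may not reproduce every engine’s wildcard handling, and it says nothing about `noindex` tags.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt content for illustration.
robots_txt = """\
User-agent: *
Disallow: /cart/
Disallow: /internal/
"""

important_pages = [
    "https://www.example.com/products/blue-widget",
    "https://www.example.com/cart/checkout",
]

print(blocked_urls(robots_txt, important_pages))
# ['https://www.example.com/cart/checkout']
```

Running a check like this before each release catches the common case where a new disallow rule accidentally covers pages you want indexed.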

8. Why Passive Indexing is Becoming Risky

Given the dynamics of modern SEO (AI-driven search, large content volumes, speed expectations, mobile-first indexing), the margin for error is shrinking. Some reasons passive indexing is risky:

- Search engine algorithms reward freshness and rapid indexing of new content.

- IndexNow and similar protocols shift the equilibrium toward signalling rather than waiting. (Wikipedia)

- Large websites, marketplaces and e-commerce platforms can’t afford gaps in indexation — their business depends on visibility.

- Competitive content niches mean ranking advantage is gained by being indexed earlier and consistently.

Thus, passive indexing is often the baseline, but it is not enough to gain or maintain an edge.

9. How to Transition from Passive to Proactive Indexing

If you’re an SEO or digital marketer working with a site that has meaningful scale or competition, here’s how you build a stronger indexing strategy:

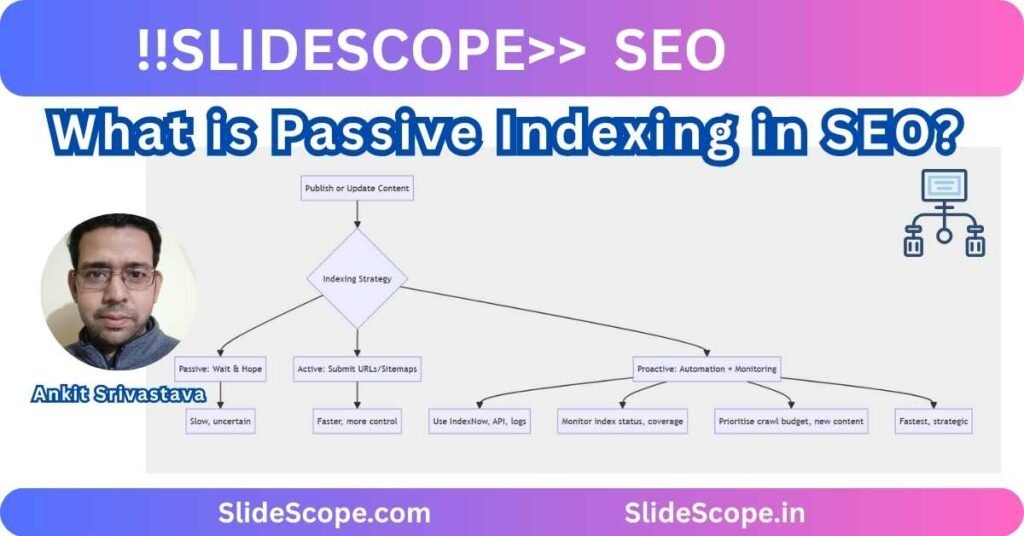

After you publish or update content, the three indexing strategies compare as follows:

- Passive (wait and hope): slow, uncertain.

- Active (submit URLs/sitemaps): faster, more control.

- Proactive (automation + monitoring): fastest, strategic. It involves using IndexNow, APIs and logs; monitoring index status and coverage; and prioritising crawl budget and new content.

Key steps:

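- Implement URL submission protocols where they are supported (IndexNow for participating engines such as Bing and Yandex; the Search Console API for sitemap submission).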

- Set up index coverage dashboards to measure metrics such as “time to index,” “% of target pages indexed,” “crawler usage vs index usage”.

- Use automation for critical pages (new product launches, time-sensitive updates).

- Audit and prune low-value pages so crawl budget is used efficiently.

- Combine this with high-quality content, internal linking, technical health and structured data.
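The dashboard metrics named in the steps above (“time to index” and “% of target pages indexed”) can be computed from very little data. Here is a minimal sketch. The `PageRecord` structure and the sample dates are hypothetical, and in practice the indexed date would come from whatever source you trust, such as a Search Console export.

```python
from dataclasses import dataclass
from datetime import date
from statistics import median
from typing import Optional


@dataclass
class PageRecord:
    url: str
    published: date
    indexed: Optional[date] = None  # None means not yet seen as indexed


def index_metrics(pages):
    """Compute the share of pages indexed and the median days from publish to index."""
    indexed = [p for p in pages if p.indexed is not None]
    days = [(p.indexed - p.published).days for p in indexed]
    return {
        "pct_indexed": round(100 * len(indexed) / len(pages), 1) if pages else 0.0,
        "median_days_to_index": median(days) if days else None,
    }


pages = [
    PageRecord("/a", date(2025, 1, 1), date(2025, 1, 3)),
    PageRecord("/b", date(2025, 1, 1), date(2025, 1, 9)),
    PageRecord("/c", date(2025, 1, 5)),  # still waiting
    PageRecord("/d", date(2025, 1, 6), date(2025, 1, 10)),
]

print(index_metrics(pages))
# {'pct_indexed': 75.0, 'median_days_to_index': 4}
```

The median is used instead of the mean so that a few pages that take weeks to index do not hide the typical experience.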

10. Practical Checklist for SEO Teams

Here’s a quick checklist you can execute, as I would in a training session:

- ✅ Ensure each important page has a clear link from homepage or sitemap.

- ✅ Submit XML sitemap and update it when major changes occur.

- ✅ Use Search Console URL Inspection for priority pages (new launches).

- ✅ Monitor Search Console’s “Coverage” report (now labelled “Page indexing”) weekly, checking both the Excluded and Valid (Indexed) statuses.

- ✅ Build an Index-status dashboard with metrics like: “Days from publish → index”, “Indexed pages / Published pages”, “Crawl budget used vs pages discovered”.

- ✅ Set up alerts for irregular trends, such as a sudden drop in indexed pages or a spike in crawl errors.

- ✅ Use structured data markup to signal machine readability and relevance.

- ✅ Prioritise removing or noindexing thin/duplicate content to conserve crawl budget.
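The alerting item in the checklist can start as something very simple. The sketch below flags any period where the indexed-page count falls by more than a chosen fraction compared with the previous period. The 10% threshold and the weekly counts are illustrative assumptions, not recommended values.

```python
def indexed_drop_alerts(counts, threshold=0.10):
    """Return (position, previous, current, drop_fraction) for each sharp drop.

    counts is a chronological list of indexed-page totals. A drop is flagged
    when it exceeds `threshold` as a fraction of the previous value.
    """
    alerts = []
    for i in range(1, len(counts)):
        previous, current = counts[i - 1], counts[i]
        if previous > 0:
            drop = (previous - current) / previous
            if drop > threshold:
                alerts.append((i, previous, current, round(drop, 3)))
    return alerts


weekly_indexed = [1200, 1215, 1190, 980, 995]  # hypothetical weekly totals
print(indexed_drop_alerts(weekly_indexed))
# [(3, 1190, 980, 0.176)]
```

Here only the fall from 1190 to 980 (about 17.6%) is reported, while the small week-to-week wobble before it is ignored.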

11. How This Relates to AI & Future SEO

As artificial intelligence and large-language-model driven search evolve, indexing is far more than “just get the page in.” It becomes a data-signal pipeline — content that is crawled, rendered, understood, categorised and surfaced. In this context:

- Passive indexing may mean your content is in the index, but not optimally surfaced.

- Proactive indexing means you’re ensuring your content is not only included, but ready for AI-driven retrieval (structured data, entity markup, freshness signals).

- Search engines increasingly expect real-time content updates; indexing delays translate directly into SERP delays.
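As a small illustration of the structured data and freshness signals mentioned above, the sketch below generates schema.org `Article` markup as JSON-LD with `datePublished` and `dateModified`. The headline, URL and dates are placeholders. Adding this markup is a way to state the facts clearly for machines, and it does not by itself guarantee any particular treatment by a search engine.

```python
import json
from datetime import datetime, timezone


def article_jsonld(headline, url, published, modified):
    """Return a JSON-LD script tag describing an article and its freshness dates."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "mainEntityOfPage": url,
        "datePublished": published.isoformat(),
        "dateModified": modified.isoformat(),
    }
    return (
        '<script type="application/ld+json">'
        + json.dumps(data, indent=2)
        + "</script>"
    )


snippet = article_jsonld(
    headline="What Is Passive Indexing?",          # placeholder values
    url="https://www.example.com/passive-indexing",
    published=datetime(2025, 1, 10, 9, 0, tzinfo=timezone.utc),
    modified=datetime(2025, 2, 1, 14, 30, tzinfo=timezone.utc),
)
print(snippet)
```

Generating the tag from your CMS data keeps `dateModified` in step with real edits instead of relying on someone updating it by hand.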

12. Final Thoughts

To summarise: passive indexing represents the minimalist approach — publish and wait. It may have worked in simpler times. But today, with dynamic search ecosystems, content scale, AI-driven signals and rapid competition, it’s no longer sufficient on its own.

By understanding how indexing has evolved and implementing a more active-to-proactive approach, you create more visibility, better timeliness and stronger control over your SEO outcomes.

If you’re focused on business-driven organic growth, I encourage you to review your indexing workflows, measure your index-coverage health, and move from “hope” to “strategy” when it comes to indexing.

Ready to dig deeper? In the next session we’ll explore how to build an Index-Coverage Dashboard in Power BI or Google Data Studio (now Looker Studio) to monitor your indexing pipeline in real time.
